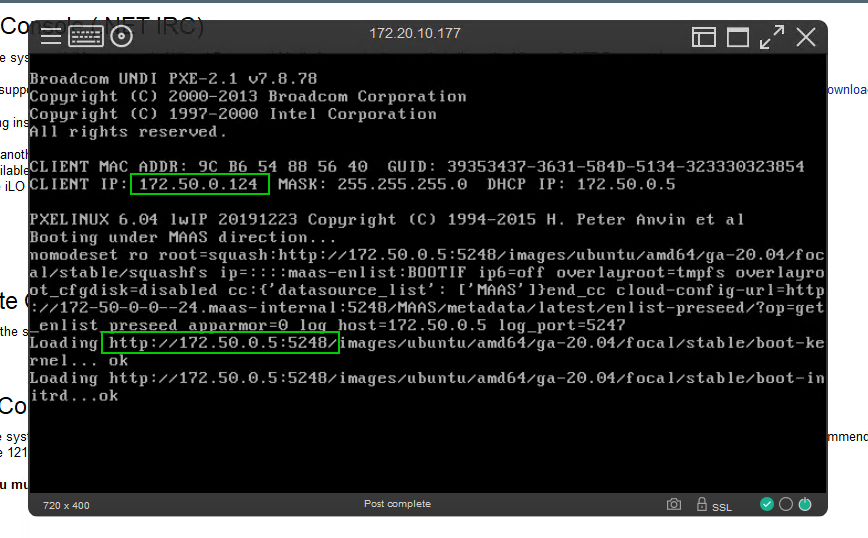

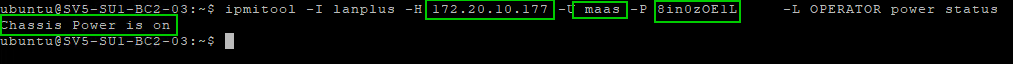

In a previous post, "MAAS (Metal-as-a-Service) Full HA Installation" on Crying Cloud, we deployed MAAS controllers to manage on-premises hardware. Let’s explore what Azure Arc and Azure Automanage can do to monitor our Metal-as-a-Service infrastructure and keep it operational.

From Azure Arc, we want to generate an onboarding script for multiple Linux servers using a service principal, as described in "Connect hybrid machines to Azure at scale" (Azure Arc, Microsoft Docs). We can then run the script on each of the Ubuntu MAAS controllers.
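The generated script ends by calling `azcmagent connect` with the service principal details. As a rough sketch of that final step only (not the portal's actual script, which also downloads and installs the agent), the Python below assembles the command for one controller. The `ArcOnboardingConfig` class, the helper names, and all values are hypothetical placeholders; take the real values from the script Azure generates for you.

```python
import shlex
from dataclasses import dataclass
from typing import List


@dataclass
class ArcOnboardingConfig:
    """Hypothetical container for the values found in the generated script."""
    service_principal_id: str
    service_principal_secret: str
    tenant_id: str
    subscription_id: str
    resource_group: str
    location: str


def build_connect_command(cfg: ArcOnboardingConfig) -> List[str]:
    """Return the argument list for `azcmagent connect` with a service principal."""
    return [
        "azcmagent", "connect",
        "--service-principal-id", cfg.service_principal_id,
        "--service-principal-secret", cfg.service_principal_secret,
        "--tenant-id", cfg.tenant_id,
        "--subscription-id", cfg.subscription_id,
        "--resource-group", cfg.resource_group,
        "--location", cfg.location,
    ]


def redact(cmd: List[str]) -> str:
    """Render the command as a shell string with the secret masked, for logging."""
    shown = list(cmd)
    if "--service-principal-secret" in shown:
        shown[shown.index("--service-principal-secret") + 1] = "***"
    return shlex.join(shown)


if __name__ == "__main__":
    # Placeholder values only; nothing is executed against Azure here.
    cfg = ArcOnboardingConfig(
        service_principal_id="<app-id>",
        service_principal_secret="<secret>",
        tenant_id="<tenant-id>",
        subscription_id="<subscription-id>",
        resource_group="<resource-group>",
        location="<region>",
    )
    print(redact(build_connect_command(cfg)))
```

On a controller, the resulting list could be passed to `subprocess.run` with root privileges. Building it as a list rather than a single string avoids shell quoting problems with the secret.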

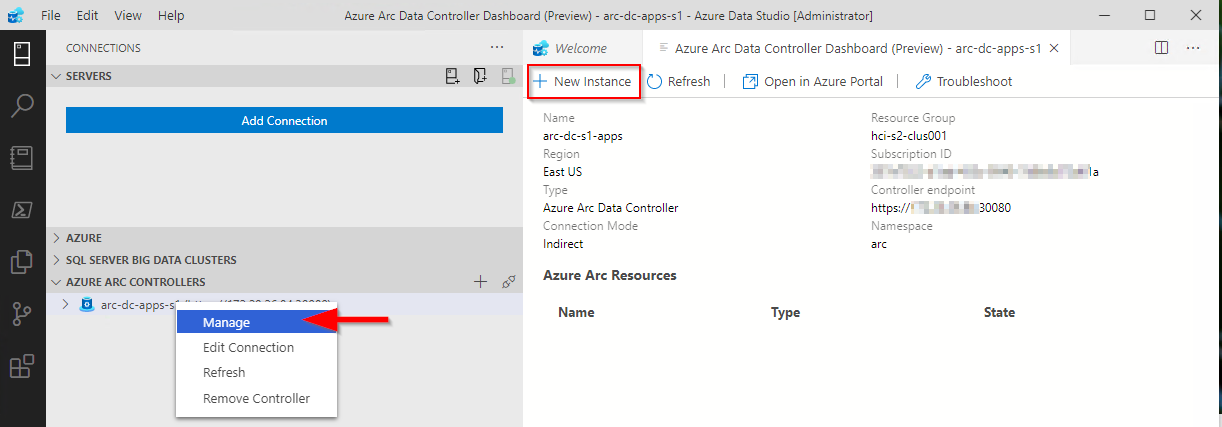

We now have an Azure Resource that represents our on-premise Linux server.

At the time of writing, if I use the ‘Automanage’ blade and try to apply the built-in or a custom Automanage profile, an error is displayed: “Validation failed due to error, please try again and file a support case: TypeError: Cannot read properties of undefined (reading 'check')”

Try as I might, I could not get past this error. However, by opening each Arc server resource we can enable ‘Azure best practices - Dev / Test’ on that server individually.

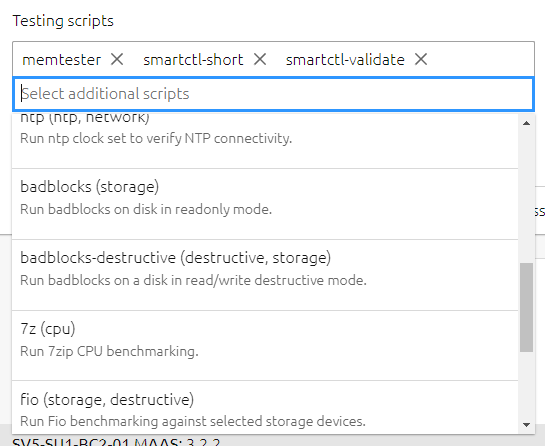

At the time of trying this, Automanage is still in preview and I could not create and add a custom policy. For now, we can move ahead with the ‘Dev / Test’ policy, which still validates the Azure services we want to enable.

We can still see a summary of the activity in the ‘Automanage’ blade.

I had been exploring some configuration settings with the older agents and Automanage, and it looks like some leftover configuration issues persisted.

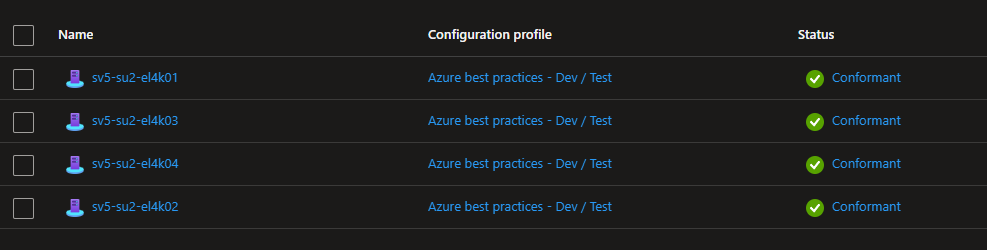

After removing the OMSForLinuxAgent and reinstalling the Azure Arc Connected Machine agent, all servers showed as Conformant.

As we build out more of the lab infrastructure, we will continue to explore the uses of Azure Arc and Azure Automanage.