How long would it take to deploy 100+ physical servers with Ubuntu, create a MicroK8s cluster, and connect it to Azure using Azure Arc?

This post walks through the process of creating a MicroK8s cluster and connecting it to Azure, using Azure Arc, MAAS, Ubuntu, and MicroK8s.

First, we need a primary server to start the K8s cluster, so let’s deploy one.

Let’s create a tag ‘AzureConnectedMicroK8s’ so we can keep track of the servers we are deploying.

While we wait for the deployment, let’s head over to the MicroK8s website (MicroK8s - Zero-ops Kubernetes for developers, edge and IoT) and look up the commands for creating a MicroK8s cluster using snaps. We want to use a specific version, 1.24.

sudo apt-get update

sudo apt-get upgrade -y

sudo snap install microk8s --classic --channel=1.24

sudo microk8s status --wait-ready

We need to extend the life of the join token so we can reuse it for multiple servers. The MicroK8s command reference shows we do this with the --token-ttl switch.

sudo microk8s add-node --token-ttl 9999999

I want to use cloud-init commands with MAAS to deploy Ubuntu and join it automatically to the MicroK8s cluster, so let’s build that script. We will try two variants: one that pulls updates and one that doesn’t. Let’s tag the two new nodes we are adding first.
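The join string printed by add-node is what the cloud-init script needs. As a minimal sketch, assuming the output contains a line of the form `microk8s join <ip>:<port>/<token>/<suffix>` (the shape of the join command used in this post), a small helper can pull that string out. The function name `extract_join_target` is hypothetical and not part of MicroK8s.

```shell
#!/bin/bash
# Hypothetical helper: read `microk8s add-node` output on stdin and print
# the first join target of the form <ipv4>:<port>/<token>/<suffix>.
# Assumes the output includes such a string; prints nothing otherwise.
extract_join_target() {
  grep -oE '[0-9]+(\.[0-9]+){3}:[0-9]+/[0-9a-f]+/[0-9a-f]+' | head -n 1
}

# Example usage on the primary node (not run here):
#   target=$(sudo microk8s add-node --token-ttl 9999999 | extract_join_target)
#   echo "sudo microk8s join $target"
```

Taking only the first match is a design choice for this sketch: if add-node lists several addresses, you may prefer to pick the one on the network your MAAS-deployed nodes can reach.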

#!/bin/bash

sudo apt-get update

sudo apt-get upgrade -y

sudo snap install microk8s --classic --channel=1.24

# updated content to include a random delay (10 to 249 seconds) for adding lots of nodes

randomNumber=$((10 + RANDOM % 240))

sleep "$randomNumber"

sudo microk8s join 172.30.9.31:25000/8a4fb96dd8c711aaa895ba0da2e0dd91/fb637a6f6f64

We can check the deployment and look for the additional nodes using the kubectl command.

# let’s create a Kube config file

mkdir -p ~/.kube

sudo microk8s kubectl config view --raw > $HOME/.kube/config

sudo usermod -aG microk8s $USER

# fix permissions for Helm warning

chmod go-r ~/.kube/config

sudo snap install kubectl --classic

kubectl version --client

kubectl cluster-info

# Add auto completion and Alias for kubectl

sudo apt-get install -y bash-completion

echo 'source <(kubectl completion bash)' >>~/.bashrc

echo 'alias k=kubectl' >>~/.bashrc

echo 'complete -o default -F __start_kubectl k' >>~/.bashrc

# restart the session before using the alias

kubectl get nodes

# using k Alias

k get nodes

k get nodes -o wide

Let’s get the K8s dashboard running.

sudo microk8s enable dashboard ingress storage

# add MAAS DNS servers

sudo microk8s enable dns:172.30.0.30,172.30.0.32

# DNS troubleshooting

# https://kubernetes.io/docs/tasks/administer-cluster/dns-debugging-resolution/

k -n kube-system edit service kubernetes-dashboard

# change the service type from ClusterIP to NodePort (around line 35)

# save this token for later use

k create token -n kube-system default --duration=8544h

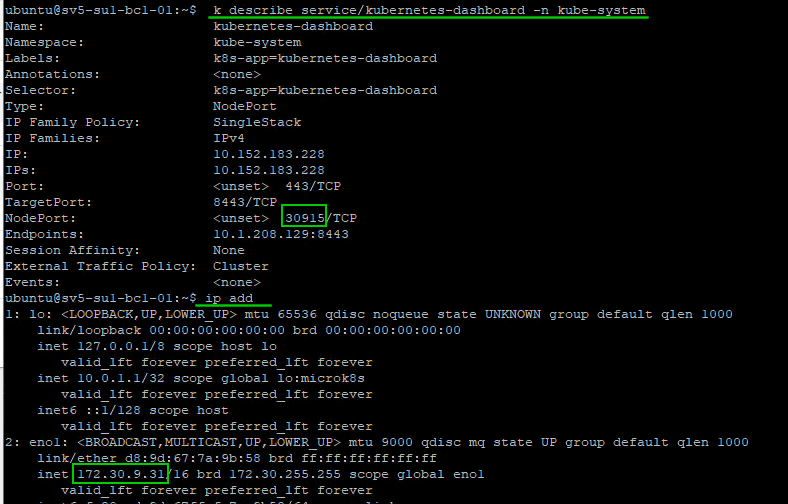

k describe service/kubernetes-dashboard -n kube-system

Next, let’s deploy some workers from MAAS and tag the servers accordingly.

#!/bin/bash

sudo apt-get update

sudo apt-get upgrade -y

sudo snap install microk8s --classic --channel=1.24

sudo microk8s join 172.30.9.31:25000/8a4fb96dd8c711aaa895ba0da2e0dd91/fb637a6f6f64 --worker

While waiting for these workers, I am going to use choco to install Octant ("choco install octant"). Create a copy of the Linux kubeconfig as a local ‘config’ file in the user\.kube directory on the Windows desktop, then run octant.exe. We can then access the dashboard via http://127.0.0.1:7777, which shows us the new workers we have just added.

Let’s connect the cluster to Azure using the following guide: Quickstart: Connect an existing Kubernetes cluster to Azure Arc - Azure Arc | Microsoft Docs. First, let’s make sure the resource providers are registered.

# Using Powershell

Get-AzResourceProvider -ProviderNamespace Microsoft.Kubernetes

Get-AzResourceProvider -ProviderNamespace Microsoft.KubernetesConfiguration

Get-AzResourceProvider -ProviderNamespace Microsoft.ExtendedLocation

Next, I added role assignments to my user account for ‘Kubernetes Cluster - Azure Arc Onboarding’ and ‘Azure Arc Kubernetes Cluster Admin’. Depending on your onboarding account and process, this may vary.
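The provider check can also be done from the Azure CLI once it is installed and you are logged in. The following is a minimal sketch, not the method used in this post: the function names `provider_state` and `ensure_arc_providers` are hypothetical, and it assumes `az provider show` and `az provider register` are available in your CLI version.

```shell
#!/bin/bash
# Hypothetical helper: print the registration state of one resource provider.
provider_state() {
  az provider show --namespace "$1" --query registrationState --output tsv
}

# Hypothetical helper: print the state of each provider this post checks
# and request registration for any that is not yet "Registered".
ensure_arc_providers() {
  local ns state
  for ns in Microsoft.Kubernetes Microsoft.KubernetesConfiguration Microsoft.ExtendedLocation; do
    state=$(provider_state "$ns")
    echo "$ns: $state"
    if [ "$state" != "Registered" ]; then
      az provider register --namespace "$ns"
    fi
  done
}

# Example usage after `az login` (not run here):
#   ensure_arc_providers
```

Registration is asynchronous, so a provider may not report Registered immediately; running the function again later shows the current state.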

# run on master node

sudo microk8s enable helm3

sudo snap install helm --classic

# install Azure CLI

curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

az login

# use the browser device login code

az account show

# use 'az account set --subscription subname' to ensure you have correct subscription

az extension add --name connectedk8s

az extension add --name k8s-configuration

# using an existing resource group

az connectedk8s connect --name sv5-microk8s --resource-group ArcResources

# troubleshooting

# https://docs.microsoft.com/en-us/azure/azure-arc/kubernetes/troubleshooting#enable-custom-locations-using-service-principal

While this is deploying, you can open another connection and run

k -n azure-arc get pods,deployments

If Azure Arc runs into errors while creating the deployments, you can query the pod logs using "k -n azure-arc logs <pod-name>".
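To spot which pods to pull logs from, the pod listing can be filtered down to the ones that are not healthy. This is a minimal sketch under the assumption that `kubectl get pods --no-headers` prints its default columns (NAME, READY, STATUS, RESTARTS, AGE); the function name `pods_needing_attention` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin
# and print the name and status of every pod whose STATUS column is
# neither Running nor Completed.
pods_needing_attention() {
  awk '$3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Example usage (not run here):
#   kubectl -n azure-arc get pods --no-headers | pods_needing_attention
```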

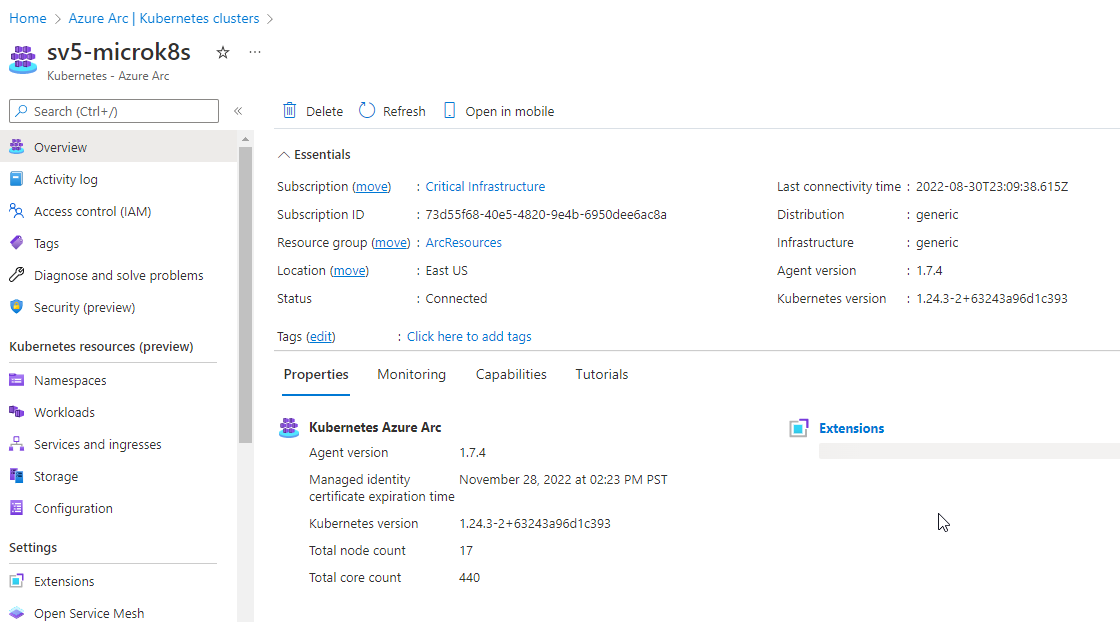

Checking the Azure resource group, we can see the resource being created, with a total node count of 7 and a total core count of 160.

Using the token we saved, you can now sign in to the on-premises cluster from Azure.

You can now explore the namespaces, workloads, services, etc. from the Azure portal.

Let’s scale this out and show how easily you can create a large-scale K8s cluster using your old hardware, managed by MAAS and connected to Azure using Azure Arc. Using the same worker join command as before in the cloud-init script, let’s deploy 10 new physical servers from MAAS and add them directly to the K8s cluster as workers.
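When many workers are deployed at once, the random delay from the earlier join script can be reused so the nodes do not all contact the primary at the same moment. The sketch below factors that delay into a function; `join_delay` is a hypothetical name, and the defaults simply mirror the 10 + RANDOM % 240 expression used earlier. It relies on bash's RANDOM variable.

```shell
#!/bin/bash
# Hypothetical helper: print a random whole number of seconds in the range
# [min, min + span). Defaults give 10 to 249 seconds.
join_delay() {
  local min=${1:-10} span=${2:-240}
  echo $((min + RANDOM % span))
}

# Example cloud-init usage (not run here; the join string is the one from this post):
#   sleep "$(join_delay)"
#   sudo microk8s join 172.30.9.31:25000/8a4fb96dd8c711aaa895ba0da2e0dd91/fb637a6f6f64 --worker
```

A wider span spreads the joins out further at the cost of a longer wait per node; the right value depends on how many servers are deployed together.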

And we can see the node count has increased to 17.
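The node count can be cross-checked from the command line as well. This is a small sketch assuming the default `kubectl get nodes --no-headers` column order (NAME, STATUS, ROLES, AGE, VERSION); the function name `count_ready_nodes` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print how many nodes report a STATUS of exactly "Ready".
# Nodes shown as NotReady or Ready,SchedulingDisabled are not counted.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example usage (not run here):
#   kubectl get nodes --no-headers | count_ready_nodes
```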

I do expect some server failures here, as we are still onboarding some hardware and cleaning up issues, but let’s grab all the ready servers and see how well Ubuntu, MAAS, MicroK8s, and Azure Arc can handle this scale-out.

Obviously, adding that many servers can stress the system. There were some errors around pulling the networking images, but after being left for a few hours they self-corrected, completed the deployment, and joined the cluster successfully.

We do have some internal MTU sizing issues in the switches, so not all hosts were added. Looking at the DaemonSets under workloads, we can see the calico and nginx pods at 121 ready out of 121 deployed.

Looking at the node count we have 121 nodes added and 5452 CPU cores ready to work. That’s a lot of power you can now leverage to run container-based deployments.
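The core total can also be approximated from the cluster itself by summing each node's reported CPU capacity. This is a sketch under the assumption that every node reports `.status.capacity.cpu` as a whole number of cores; the function name `sum_lines` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: sum whole numbers read one per line from stdin.
sum_lines() {
  awk '{ total += $1 } END { print total + 0 }'
}

# Example usage (not run here): print one CPU capacity per node, then add them up.
#   kubectl get nodes -o jsonpath='{range .items[*]}{.status.capacity.cpu}{"\n"}{end}' | sum_lines
```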

Overall, it was fairly easy to reuse a wide range of new and old hardware, create a large Kubernetes cluster, and connect it to the Azure portal via Azure Arc. This cluster could be used for a wide range of services, including custom container hosting or Azure-delivered services like Azure SQL or Machine Learning.

We will continue to explore Azure Arc-connected services.

Explore

Quickstart: Connect an existing Kubernetes cluster to Azure Arc - Azure Arc | Microsoft Docs

MAAS (Metal-as-a-Service) Full HA Installation — Crying Cloud

Configure Kubernetes cluster - Azure Machine Learning | Microsoft Docs
