Out of the box, AKS Edge Essentials does not provide persistent storage for workloads. That's OK if you're running stateless apps, but more often than not you'll need to run stateful apps. There are a couple of options you can use to enable this:

- Create a manual storage class for local storage on the node

- Create a StorageClass to provision the persistent storage

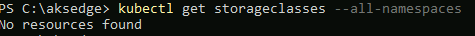

First, I'm checking that no storage classes exist. This is a newly deployed AKS-EE cluster, so I'm just double-checking:

kubectl get storageclasses --all-namespaces

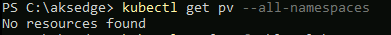

Next, check that no persistent volumes exist:

kubectl get pv --all-namespaces

Manual storage class method

Create a local manual persistent volume

Create a YAML file with the following config: (local-host-pv.yaml)

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: task-pv-volume
      labels:
        type: local
    spec:
      storageClassName: manual
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteOnce
      hostPath:
        path: "/mnt/data"

Now deploy it:

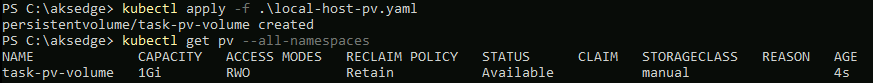

kubectl apply -f .\local-host-pv.yaml

kubectl get pv --all-namespaces

Create persistent volume claim

Create a YAML file with the following config: (pv-claim.yaml)

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: task-pv-claim
    spec:
      storageClassName: manual
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 100Mi

Now deploy it:

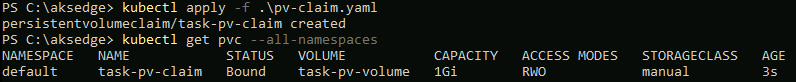

kubectl apply -f .\pv-claim.yaml

kubectl get pvc --all-namespaces
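Once the claim shows as Bound, a pod can mount it. The manifest below is a minimal sketch and not part of the original walkthrough: the pod name, container name, nginx image and mount path are all assumptions chosen for illustration. Only the claim name task-pv-claim comes from the steps above.

```yaml
# Hypothetical pod that consumes the manually created claim.
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod              # illustrative name
spec:
  volumes:
    - name: task-pv-storage
      persistentVolumeClaim:
        claimName: task-pv-claim # the claim created above
  containers:
    - name: task-pv-container
      image: nginx               # assumed image; any container would do
      ports:
        - containerPort: 80
      volumeMounts:
        # Files written here end up under /mnt/data on the node (the hostPath of the PV).
        - mountPath: "/usr/share/nginx/html"
          name: task-pv-storage
```

Saved to a file, it can be applied with kubectl apply -f in the same way as the volume and the claim.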

The problem with the above approach!

The issue with the first method is that each persistent volume has to be created manually. Helm charts and deployment YAML files typically expect a default StorageClass to handle the provisioning, so that the config stays portable and you don't have to refactor it for each cluster.
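To make that concrete, here is a sketch of the kind of claim a chart typically generates. The name and size are hypothetical and not taken from any particular chart. Note that there is no storageClassName field, so the claim relies on the cluster having a default StorageClass to provision a volume for it.

```yaml
# Hypothetical chart-generated claim; name and size are illustrative.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-example-0
spec:
  # No storageClassName: the cluster's default StorageClass is expected to handle it.
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 8Gi
```

On a cluster with no default StorageClass and no matching pre-created volume, a claim like this has nothing to bind to.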

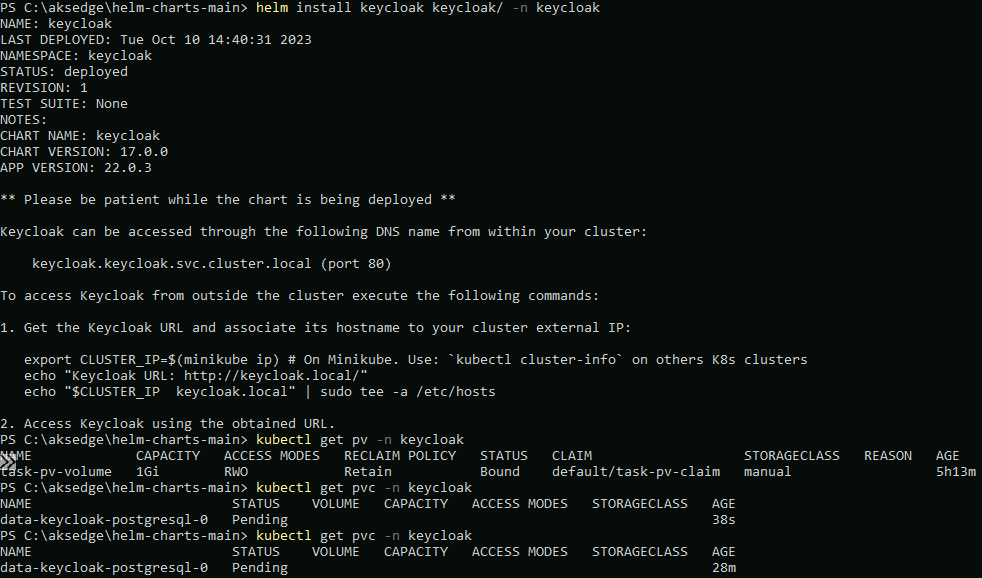

As an example to show the problem, I've tried to deploy Keycloak using a Helm chart; it uses a PostgreSQL database, which needs a PVC:

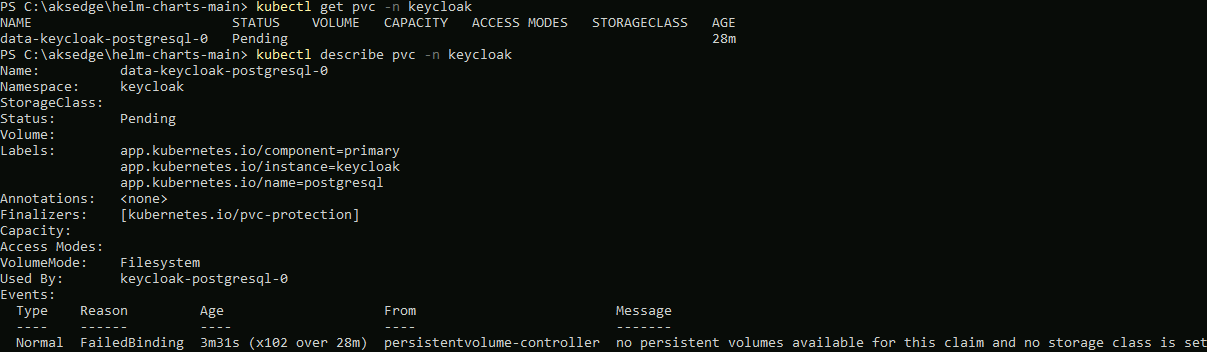

Using kubectl describe pvc -n keycloak, I can see the underlying problem: the persistent volume claim stays in Pending because there are no persistent volumes or storage classes available:

Create a Local Path provisioner StorageClass

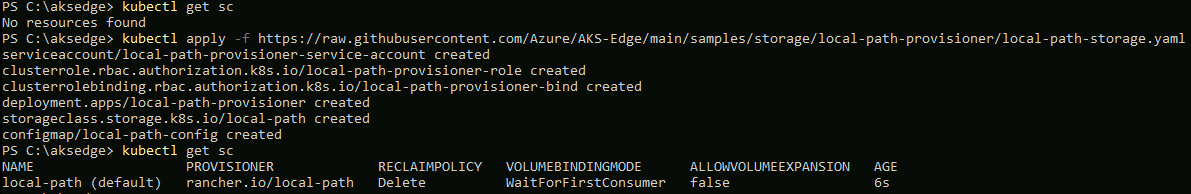

To fix this, we need to deploy a storage class to the cluster. For this example, I'm using the Local Path provisioner sample from the AKS-Edge repository:

kubectl apply -f https://raw.githubusercontent.com/Azure/AKS-Edge/main/samples/storage/local-path-provisioner/local-path-storage.yaml

Once deployed, you can check that it exists as a StorageClass:

kubectl get sc

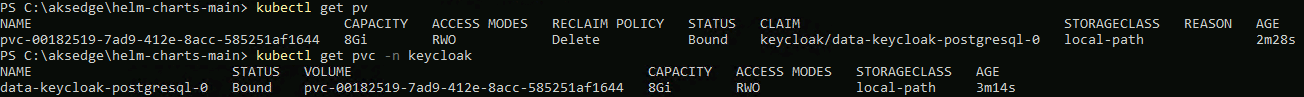

Once the storage class is available, when I deploy the Helm chart again, the persistent volume and claim are created successfully:

kubectl get pv

kubectl get pvc --all-namespaces
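If you want to test dynamic provisioning without a Helm chart, a small claim and pod along these lines should do it. This is a sketch under assumptions: the resource names and the busybox image are made up for illustration, and the class name local-path is what I'd expect the sample to create, so confirm it against the output of kubectl get sc before using it.

```yaml
# Hypothetical test claim; names are illustrative.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: local-path-test-claim
spec:
  storageClassName: local-path   # assumed name; check with kubectl get sc
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 128Mi
---
# Hypothetical pod that writes one file to the provisioned volume.
apiVersion: v1
kind: Pod
metadata:
  name: local-path-test-pod
spec:
  containers:
    - name: writer
      image: busybox             # assumed image
      command: ["sh", "-c", "echo hello > /data/test.txt && sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: local-path-test-claim
```

The pod is included because, depending on the volume binding mode of the class, the claim may stay Pending until something actually consumes it.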

Conclusion

My advice is to deploy a StorageClass as part of the AKS Edge Essentials installation, so that volumes and claims for persistent data are provisioned automatically. As well as the Local Path provisioner, there is an example that uses NFS storage binding.