Anyone who has been running and operating Azure Stack Hub for a while is hopefully aware that a number of activities need to take place to keep it up to date and supported. The most obvious one is updating the core infrastructure using the integrated update process. This is made easier by the main dashboard of the admin portal for your stamp, which shows the update status: whether the stamp is up to date or there is an update to apply. When it comes to additional Resource Providers, you have to do the work yourself. This post details how to fix the update process for the SQL and MySQL RPs on systems running version 1910.

I’m assuming that you have already deployed the RPs and have access to the pfx file that contains the sqladapter certificate for your stamp.

Version 1910 of Azure Stack Hub requires version 1.1.47.0 of the SQL & MySQL Resource Providers.

The first thing you will need to do is download the respective RP install binaries:

SQL RP can be found here: https://aka.ms/azurestacksqlrp11470

MySQL RP can be found here: https://aka.ms/azurestackmysqlrp11470

Extract the contents of the downloaded file(s) on a system that has access to the privileged endpoint (PEP) and also has the Azure Stack Hub PowerShell modules compatible with Azure Stack Hub 1910 installed: https://docs.microsoft.com/en-us/azure-stack/operator/azure-stack-powershell-install?view=azs-1910

In the location where you extracted the RP files, create a directory called cert, then copy the pfx file containing the SQL adapter certificate into it. This step is the same for both SQL and MySQL.
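If you prefer to script this step (for example, when preparing both the SQL and MySQL folders), here is a minimal Python sketch. The function name and the paths in the usage example are hypothetical placeholders. The only behaviour taken from the post is that the pfx must end up in a cert directory alongside the extracted RP files.

```python
import shutil
from pathlib import Path


def stage_certificate(extract_dir: str, pfx_path: str) -> Path:
    """Create <extract_dir>/cert and copy the SQL adapter .pfx into it.

    Hypothetical helper: extract_dir is wherever you unpacked the RP package,
    pfx_path is your existing SQL adapter certificate. Returns the copied file.
    """
    source = Path(pfx_path)
    if source.suffix.lower() != ".pfx":
        raise ValueError(f"expected a .pfx file, got {source.name}")
    if not source.is_file():
        raise FileNotFoundError(source)

    cert_dir = Path(extract_dir) / "cert"
    cert_dir.mkdir(parents=True, exist_ok=True)  # safe to re-run

    destination = cert_dir / source.name
    shutil.copy2(source, destination)  # copy2 also preserves file timestamps
    return destination
```

Run it once per extraction folder, e.g. `stage_certificate(r"C:\Temp\SQLRP", r"C:\Certs\sqladapter.pfx")`, where both paths are illustrative.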

We need to modify the common.psm1 file in the Prerequisites folder, similar to the changes in an earlier blog post on deploying the RP (based on version 1.1.33.0).

Looking at the file shows the prerequisites the function checks for:

It expects version 2.3.0 of the AzureRM module, with the modules installed under \SqlMySqlPsh.

If you followed the official instructions for installing the Azure Stack PowerShell module compatible with 1910, this check will never pass, so we need to modify the Test-AzureStackPowerShell function.

Change the value of $AzPshInstallFolder on line 82 from "SqlMySqlPsh" to "WindowsPowerShell\Modules".

Change the value of $AzureRmVersion on line 84 from "2.3.0" to "5.8.3".
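The two edits above can also be applied by a script, which helps if you patch both the SQL and MySQL packages. This minimal Python sketch assumes that line 82 literally contains "SqlMySqlPsh" (quotes included) and line 84 contains "2.3.0", and that the file is UTF-8. It refuses to write anything if either line differs, and it keeps a .bak copy of the original. The function names are hypothetical, and lines 118/119 are left for the manual edit described next.

```python
from pathlib import Path

# (1-based line number, old text, new text) from the steps above.
# Assumption: each value appears in double quotes on that exact line.
EDITS = [
    (82, '"SqlMySqlPsh"', '"WindowsPowerShell\\Modules"'),
    (84, '"2.3.0"', '"5.8.3"'),
]


def patch_lines(text: str, edits=EDITS) -> str:
    """Apply each (line, old, new) substitution to that line only."""
    # Split on "\n" so numbering matches an editor; any "\r" stays on its line.
    lines = text.split("\n")
    for lineno, old, new in edits:
        index = lineno - 1
        if index >= len(lines) or old not in lines[index]:
            raise ValueError(f"line {lineno} does not contain {old}; layout differs")
        lines[index] = lines[index].replace(old, new, 1)
    return "\n".join(lines)


def patch_file(path: str, edits=EDITS) -> Path:
    """Back up common.psm1 to common.psm1.bak, then write the patched file."""
    target = Path(path)
    # newline="" preserves the file's original CRLF line endings.
    with open(target, encoding="utf-8", newline="") as f:
        original = f.read()
    patched = patch_lines(original, edits)  # validate before touching disk
    backup = target.with_name(target.name + ".bak")
    for destination, content in ((backup, original), (target, patched)):
        with open(destination, "w", encoding="utf-8", newline="") as f:
            f.write(content)
    return backup
```

Because the old values must be present, running it a second time on an already patched file raises an error instead of silently changing anything.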

We need some further changes to the function to ensure that the correct module path is evaluated:

Locate lines 118 and 119 in the module.

Modify the path so it reflects that of the loaded module: change "AzureRM" to "AzureRM.Profile", as highlighted in the image below.

N.B. - The instructions for the fix are also applicable for new SQL & MySQL Resource Provider deployments, as they use the common.psm1 module too.

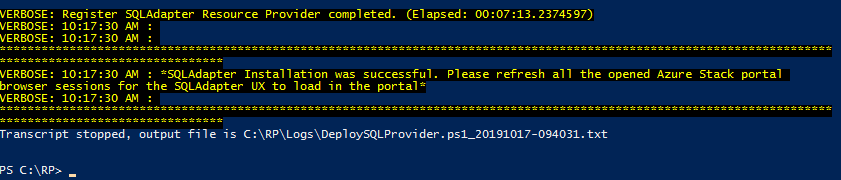
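With the edits above, the check should be looking for AzureRM.Profile 5.8.3 under a WindowsPowerShell\Modules folder, so it can be worth confirming the module is actually there before starting a 50-minute update. The minimal Python sketch below is a hypothetical pre-flight check, not a port of Test-AzureStackPowerShell. It assumes PowerShellGet's side-by-side layout (`<ModulesRoot>\<Name>\<Version>\<Name>.psd1`) and that you pass your WindowsPowerShell\Modules folder, typically `C:\Program Files\WindowsPowerShell\Modules` for an all-users install.

```python
from pathlib import Path
from typing import List


def installed_versions(modules_root: str, name: str) -> List[str]:
    """List version folders under <modules_root>/<name> that contain <name>.psd1."""
    module_dir = Path(modules_root) / name
    if not module_dir.is_dir():
        return []
    return sorted(
        child.name
        for child in module_dir.iterdir()
        if child.is_dir() and (child / f"{name}.psd1").is_file()
    )


def preflight(modules_root: str, name: str = "AzureRM.Profile",
              required: str = "5.8.3") -> None:
    """Raise if the required module version is missing (hypothetical helper)."""
    found = installed_versions(modules_root, name)
    if required not in found:
        raise RuntimeError(
            f"{name} {required} not found under {modules_root}; "
            f"found: {found or 'none'}"
        )
```

For example, `preflight(r"C:\Program Files\WindowsPowerShell\Modules")` returns quietly when the expected version folder and manifest exist.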

Save the file, then run the update script example tailored to your environment; the update should now work.

You should see status messages reporting progress as the update runs.

Overall, it takes approximately 50 minutes to run through the update.